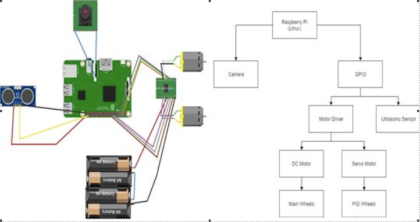

Raspberry Pi image recognition

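    import numpy as np
    import cv2

    # Open the default camera (-1 lets OpenCV pick the first available device).
    cap = cv2.VideoCapture(-1)

    while True:
        ret, frame = cap.read()
        if not ret:
            # No frame was delivered, so stop instead of passing None on.
            break
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        cv2.imshow('real', frame)
        # Exit when Esc (key code 27) is pressed.
        if cv2.waitKey(1) & 0xFF == 27:
            break

    cap.release()
    cv2.destroyAllWindows()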
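Two details of the loop above are easy to misread: the `& 0xFF` mask applied to the `cv2.waitKey` result, and what the BGR-to-gray conversion computes. The sketch below illustrates both in plain Python so that it runs without a camera or OpenCV. `is_escape` and `bgr_to_gray` are hypothetical helper names, and the weights are the ones OpenCV documents for its RGB-to-gray conversion, so the result may differ from `cv2.cvtColor` by a rounding step.

```python
ESC_KEY = 27  # key code of the Esc key


def is_escape(key_code):
    """Return True if the low byte of a waitKey-style result is Esc."""
    # Keep only the low byte: some platforms set extra high bits on the
    # returned value, and -1 (no key pressed) becomes 255 after masking.
    return (key_code & 0xFF) == ESC_KEY


def bgr_to_gray(b, g, r):
    """Approximate the gray value of one BGR pixel (channels 0..255)."""
    # Weighted sum of the channels; green contributes most, blue least.
    return int(round(0.114 * b + 0.587 * g + 0.299 * r))


if __name__ == "__main__":
    print(is_escape(27), is_escape(-1))
    print(bgr_to_gray(255, 0, 0), bgr_to_gray(0, 0, 255))
```

Note that the channel order is blue, green, red, matching the frames that `cap.read()` returns.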

Lane recognition
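The lane-recognition code in this post reduces each detected segment to a slope and an intercept, sorts the fits into a left and a right group around x = 400, averages each group, and turns the average back into end points. The following is a minimal standard-library sketch of that idea, not the post's own implementation. It assumes the split is made on the x position at the bottom edge of the image, it skips vertical and horizontal segments, and the names `fit_line`, `x_at`, `split_and_average`, and `line_endpoints` are hypothetical.

```python
from statistics import mean


def fit_line(x1, y1, x2, y2):
    """Return (slope, intercept) of y = slope * x + intercept through two points."""
    if x1 == x2:
        raise ValueError("vertical segment has no finite slope")
    slope = (y2 - y1) / (x2 - x1)
    intercept = y1 - slope * x1
    return slope, intercept


def x_at(y, slope, intercept):
    """Return the x position where the line reaches the given y."""
    return (y - intercept) / slope


def split_and_average(segments, height, center_x=400):
    """Group segment fits into left/right of center_x and average each group."""
    left, right = [], []
    for seg in segments:
        try:
            slope, intercept = fit_line(*seg)
            x_bottom = x_at(height, slope, intercept)
        except (ValueError, ZeroDivisionError):
            continue  # vertical or horizontal segment: no usable fit
        (left if x_bottom < center_x else right).append((slope, intercept))

    def average(fits):
        if not fits:
            return None
        return mean([s for s, _ in fits]), mean([i for _, i in fits])

    return average(left), average(right)


def line_endpoints(height, fit):
    """Return [x1, y1, x2, y2] from the bottom edge up to half the height."""
    slope, intercept = fit
    y1 = height
    y2 = int(y1 * (1 / 2))
    return [int(x_at(y1, slope, intercept)), y1, int(x_at(y2, slope, intercept)), y2]


if __name__ == "__main__":
    segs = [(120, 640, 200, 0), (104, 640, 184, 0), (600, 0, 680, 640)]
    left_fit, right_fit = split_and_average(segs, height=640)
    print(line_endpoints(640, left_fit), line_endpoints(640, right_fit))
```

Returning `None` for an empty group makes a missing lane explicit, so the caller can decide to keep the previous frame's line.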

    import time

    import cv2
    import numpy as np


    def make_coordinates(img, line_parameters):
        # Turn a (slope, intercept) pair into the end points of a line that
        # runs from the bottom edge of the image up to half its height.
        slope, intercept = line_parameters
        y1 = img.shape[0]
        y2 = int(y1 * (1 / 2))
        x1 = int((y1 - intercept) / slope)
        x2 = int((y2 - intercept) / slope)
        return np.array([x1, y1, x2, y2])


    def average_slope_intercept(img, lines):
        left_fit = []
        right_fit = []
        for line in lines:
            x1, y1, x2, y2 = line.reshape(4)
            x = np.array([x1, x2])
            y = np.array([y1, y2])
            # Least-squares fit of y = slope * x + intercept through the segment.
            A = np.vstack([x, np.ones(len(x))]).T
            slope, intercept = np.linalg.lstsq(A, y, rcond=None)[0]
            # Assumption: the x_fit expression is unreadable in the source;
            # the x position where the line meets y = 0 is used here.
            x_fit = -intercept / slope
            if x_fit < 400:
                left_fit.append((slope, intercept))
            elif x_fit > 400:
                right_fit.append((slope, intercept))
        try:
            left_fit_average = np.average(left_fit, 0)
            right_fit_average = np.average(right_fit, 0)
            left_line = make_coordinates(img, left_fit_average)
            right_line = make_coordinates(img, right_fit_average)
        except Exception:
            left_line = 0
            right_line = 0
        return [left_line], [right_line]


    def display_lines(img, lines):
        line_image = np.zeros_like(img)
        if lines is not None:
            for line in lines:
                x1, y1, x2, y2 = line.reshape(4)
                # Assumption: colour and thickness are unreadable in the source.
                cv2.line(line_image, (int(x1), int(y1)), (int(x2), int(y2)), (255, 0, 0), 10)
        return line_image


    def make_canny(img):
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        _, binary = cv2.threshold(gray, 190, 255, cv2.THRESH_BINARY)
        binary_gaussian = cv2.adaptiveThreshold(
            binary, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 5)
        blur = cv2.GaussianBlur(binary_gaussian, (5, 5), 0)
        blur = cv2.bilateralFilter(blur, 9, 75, 75)
        canny_image = cv2.Canny(blur, 80, 120)
        return canny_image


    # Video source
    cap = cv2.VideoCapture(r'E:\samples\play\sample2_1.mp4')
    last_time = time.time()

    # Perspective transform setup
    h = 640
    w = 800
    # Assumption: the source points are partly unreadable in the original;
    # the third and fourth points below are placeholders.
    pts1 = np.float32([[300, 650], [580, 460], [720, 460], [1100, 650]])
    pts2 = np.float32([[200, 640], [200, 0], [600, 0], [600, 640]])
    M = cv2.getPerspectiveTransform(pts1, pts2)

    l1 = 0
    l2 = 0
    l1_copy = None
    l2_copy = None
    next2_coord_average = 400

    while True:
        ret, frame = cap.read()
        img2 = cv2.warpPerspective(frame, M, (w, h))
        try:
            canny = make_canny(img2)
            lines = cv2.HoughLinesP(canny, 3, np.pi / 180, 100, np.array([]), 100, 400)
            print(len(lines))
            if l1 == 0 and l2 == 0:
                l1, l2 = average_slope_intercept(canny, lines)
            else:
                l1_copy, l2_copy = average_slope_intercept(canny, lines)
            if l1_copy is not None:
                try:
                    if l1_copy[0][0] > l1[0][0] + 20 or l1_copy[0][0] < l1[0][0] - 20:
                        l1 = l1  # assumption: the right-hand side is missing in the source
                except Exception:
                    pass
            if l2_copy is not None:
                try:
                    if l2_copy[0][0] > l2[0][0] + 20 or l2_copy[0][0] < l2[0][0] -